Meta Learning and MAML (Model Agnostic Meta Learning)

This blog post covers meta learning and a very intuitive meta learning algorithm, Model Agnostic Meta Learning (MAML), along with a couple of its variants.

Meta Learning (“Learning To Learn”)

Many problems of interest require rapid inference from small quantities of data. We should adopt learning strategies in which a single observation, or a few, can produce an abrupt shift in behavior (single/few shot learning). Conventional models, by contrast, must inefficiently relearn their parameters whenever new data is encountered in order to incorporate the new information without catastrophic interference.

For example, a robot designed to clean up nuclear waste encounters a wide variety of tasks involving many objects. It should be able to amortize its experience from previously learnt skills and improve data efficiency in acquiring new ones, rather than learning each skill from scratch.

Another example is the malware vs. clean classification task, where a classification engine has to adapt to the new malware variants released each day. You would typically have only a few examples per class for a new day (here the new task is classifying between the malware and clean files encountered that particular day), which calls for meta-learning for “few shot classification”. (This would be an interesting application of meta learning to try out.)

Inspired by nature? This kind of flexible adaptation is a celebrated aspect of human learning (Jankowski et al., 2011), manifesting in settings ranging from motor control (Braun et al., 2009) to the acquisition of abstract concepts (Lake et al., 2015). Generating novel behavior based on inference from a few scraps of information (e.g., inferring the full range of applicability for a new word heard in only one or two contexts) is something that has remained stubbornly beyond the reach of contemporary machine intelligence.

Why not Deep Learning? When only a few training examples are presented one by one, a straightforward gradient-based solution such as a deep neural network has to completely re-learn its parameters from the data available at the moment. Such a strategy is prone to poor learning and/or catastrophic interference. In view of these hazards, non-parametric methods are often considered better suited.

General Meta Learning Framework

Meta-learning generally refers to a scenario in which an agent learns at two levels, each associated with a different time scale. Rapid, task specific learning occurs within a task, for example, when learning to accurately classify within a particular dataset. This learning is guided by knowledge accrued more gradually across tasks, which captures the way in which task structure varies across target domains. Given its two-tiered organization, this form of meta learning is often described as “learning to learn”. The task agnostic learning is what allows quick adaptation to new tasks.

This means that during meta-learning we need to provide the learning algorithm with information (an encoding) about the task/context in some form, along with the training samples associated with each task. So how do we feed data to a meta-learner?

During meta-learning we provide a support/context $D_{support}$ along with the test sample.

What is $D_{support}$? $D_{support}$ can be defined as a set of $(x_i,y_i)$ tuples, where $x_i$ is the input/observation and $y_i$ is the output/reward. $D_{support}$ provides a context (information about the task to be performed) with respect to the test sample. $D_{support}$ usually has only a few samples (few shot) per class.
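To make the shape of $D_{support}$ concrete, here is a minimal sketch of how one few shot classification episode could be assembled from a labelled dataset. The function name `sample_episode`, its parameters `n_way` and `k_shot`, and the toy data are all hypothetical choices made for illustration; they are not taken from any particular library or paper implementation.

```python
import random
from collections import defaultdict


def sample_episode(dataset, n_way, k_shot, rng):
    """Build one few-shot episode: a support set plus a single test sample.

    dataset: list of (x, y) tuples, where y is a class label.
    Returns (d_support, test_sample). d_support holds k_shot examples for
    each of n_way classes; test_sample is a held-out (x, y) from one of them.
    """
    by_class = defaultdict(list)
    for x, y in dataset:
        by_class[y].append(x)

    # A class needs at least k_shot + 1 examples to supply a held-out sample.
    eligible = sorted(c for c, xs in by_class.items() if len(xs) > k_shot)
    classes = rng.sample(eligible, n_way)

    d_support, held_out = [], []
    for c in classes:
        xs = rng.sample(by_class[c], k_shot + 1)
        d_support.extend((x, c) for x in xs[:k_shot])
        held_out.append((xs[k_shot], c))

    test_sample = rng.choice(held_out)
    return d_support, test_sample


# Toy data (an assumption): 5 classes, 10 one-dimensional observations each.
toy_dataset = [(float(10 * c + i), c) for c in range(5) for i in range(10)]
d_support, test_sample = sample_episode(
    toy_dataset, n_way=3, k_shot=2, rng=random.Random(0)
)
```

Each call returns a fresh task: a small labelled context and one sample to be classified in that context, which is the pairing a meta-learner is fed during training.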

Model Agnostic Meta Learning

The two key features of MAML are:

1) it makes gradient based solutions good at “few shot learning”.

2) it is model agnostic in the sense that it can be applied to any learning algorithm/model (classification, regression and reinforcement learning) that uses gradient descent based optimization.

Note: There are recent papers that extend MAML to unsupervised and semi-supervised tasks.

As discussed earlier, this meta learning procedure has two stages.

In the task specific update stage, the model parameters are updated separately for each task using that task's dataset.

Update 1 \[ \theta_i^{*}=\theta + \alpha\times \nabla_\theta L_i(\theta,y_i) \]

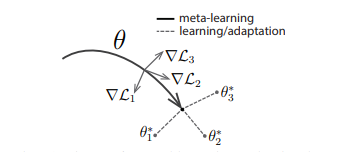

Next is the meta update stage, where we try to find a genaralist structure among these tasks such that updated parameter is always closer to each of the task specific optimal parameter, $\theta_i$(See Figure 1). In effect the parameter gets updated in the meta update in such a manner that it is one(few) steps away from doing well at each one of the tasks. This is achieved by optimizing for the performance of $f_{\theta_i^*}$ with respect to $\theta$ across the tasks as follows:

Update 2 \[ \begin{aligned} \theta = & \theta + \beta\times\sum_i^NL_i(f_{\color{red}{\theta_i^*}},y_i)

= &\theta + \beta \nabla_\theta \sum_i^NL_i(f_{\color{red}{\theta + \alpha\times \nabla_\theta L_i(\theta,y_i)}},y_i) \end{aligned} \]
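The two updates can be sketched on a toy problem where every derivative has a closed form. The following is an illustration under stated assumptions, not the authors' implementation: the model is a scalar $f_\theta(x) = \theta x$, each task is a noiseless line $y = a_i x$, the loss is mean squared error minimized by gradient descent, and each task's data is split into a train part (used in Update 1) and a validation part (used in Update 2). All function names are hypothetical.

```python
import random


def loss(theta, data):
    """Mean squared error of the toy model f_theta(x) = theta * x."""
    return sum((theta * x - y) ** 2 for x, y in data) / len(data)


def grad(theta, data):
    """Derivative of the loss with respect to theta."""
    return sum(2.0 * x * (theta * x - y) for x, y in data) / len(data)


def hessian(data):
    """Second derivative of the loss (constant in theta for this model)."""
    return sum(2.0 * x * x for x, _ in data) / len(data)


def inner_update(theta, train, alpha):
    """Update 1: one task specific gradient descent step."""
    return theta - alpha * grad(theta, train)


def meta_gradient(theta, tasks, alpha):
    """Gradient of sum_i L_i(f_{theta_i*}) with respect to theta."""
    total = 0.0
    for train, val in tasks:
        theta_i = inner_update(theta, train, alpha)
        # Chain rule: d(theta_i)/d(theta) = 1 - alpha * hessian(train).
        total += grad(theta_i, val) * (1.0 - alpha * hessian(train))
    return total


def make_task(slope, rng, n=5):
    """Return (train, val) samples from the line y = slope * x."""
    def draw():
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        return [(x, slope * x) for x in xs]
    return draw(), draw()


rng = random.Random(0)
alpha, beta = 0.1, 0.05  # step sizes chosen by hand for this toy problem
theta = 0.0
for _ in range(200):
    # Sample a batch of 4 tasks with slopes in [1, 3].
    tasks = [make_task(rng.uniform(1.0, 3.0), rng) for _ in range(4)]
    # Update 2: meta update through the inner step.
    theta = theta - beta * meta_gradient(theta, tasks, alpha)
```

With a real network the hand-written `grad` and `hessian` would be replaced by automatic differentiation through the inner step; the scalar model is used here only so that the whole computation stays visible.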

Another way of looking at MAML: The meta optimization is performed over the model parameters $\theta$, but the objective is computed using the updated models $f_{\theta_i^*}$. Thus we update $\theta$ so that it achieves a low classification error/high reward after the next step rather than at the current one. This makes the model parameter $\theta$ more sensitive to changes in task, such that small changes in the parameter can produce large improvements on whichever task it is tuned for.

Does MAML fall within the general meta learning framework? Yes, it does. The task specific learning happens in Update 1 and meta-learning happens in Update 2.

How to train? “You get good at what you practice”. Thus the training time protocol is the same as the testing time protocol, as in Vinyals et al. (2016). Split the data/demonstrations for each individual task into a training and validation pair. Use one of these for Update 1 (task specific learning) and the other for Update 2 (meta-learning).
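The chain rule makes this “next step” reading explicit. The expansion below is a sketch for a single inner step, assuming that step is gradient descent on the task loss. The shorthand $L_i(\theta)$ for $L_i(f_\theta, y_i)$ and the Hessian symbol $\nabla_\theta^2$ are notational assumptions introduced here.

\[ \begin{aligned} \theta_i^{*} &= \theta - \alpha \nabla_\theta L_i(\theta) \\ \nabla_\theta L_i(\theta_i^{*}) &= \left(I - \alpha \nabla_\theta^2 L_i(\theta)\right) \nabla_{\theta_i^{*}} L_i(\theta_i^{*}) \end{aligned} \]

The second factor is the ordinary gradient evaluated at the adapted parameters, and the first factor accounts for how $\theta_i^*$ moves when $\theta$ moves. Dropping the Hessian term gives the first-order approximation discussed in Finn et al. (2017).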

An Illustration

Unsupervised Extension

For these, refer to One-Shot Visual Imitation Learning via Meta-Learning and Semi-Supervised Few-Shot Learning with MAML. I will write on these shortly.

References

- C. Finn et al., 2017, Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks

- C. Finn et al., 2017, One-Shot Visual Imitation Learning via Meta-Learning

- Semi-Supervised Few-Shot Learning with MAML

- O. Vinyals et al., 2016, Matching Networks for One Shot Learning
